Understanding the Recent Cloudflare Network Disruption

On November 18th, Cloudflare experienced an outage that disrupted website traffic worldwide. It affected a huge number of websites and services, including many local sites here along the Treasure Coast.

Timeline of the Outage

The disruption began in the morning and lasted several hours. Here in Melbourne, Vero Beach, and Fort Pierce, most connectivity issues were resolved by mid-morning, after roughly three hours of degraded performance. However, full restoration of all affected downstream services took nearly six hours from when the problem first emerged.

A Configuration Bug

The outage was traced to a latent software defect in Cloudflare's bot management system. Cloudflare found no indication of a cyberattack, security breach, or malicious interference; this was purely a technical issue.

The bot management system plays a crucial role in Cloudflare's traffic processing pipeline, because every incoming request to the network passes through this detection layer. The problem occurred when a configuration file for this bot-detection service grew larger than the software expected. As entries accumulated, the file eventually exceeded a size limit the software wasn't designed to handle.
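To make that failure mode a little more concrete, here is a minimal Python sketch of a loader that, like the system described above, assumes a fixed maximum number of entries and treats anything larger as a fatal error. The limit of 200 and the names `MAX_FEATURES`, `ConfigTooLarge`, and `load_feature_file` are hypothetical illustrations, not Cloudflare's actual code.

```python
# Hypothetical sketch: a loader that only allows a fixed number of entries
# and raises an error when a configuration file grows past that limit.
MAX_FEATURES = 200  # assumed limit, chosen for illustration only


class ConfigTooLarge(Exception):
    """Raised when a configuration file has more entries than allowed."""


def load_feature_file(lines: list[str]) -> list[str]:
    """Parse one feature name per non-blank line, enforcing MAX_FEATURES."""
    features = [line.strip() for line in lines if line.strip()]
    if len(features) > MAX_FEATURES:
        # If nothing upstream catches this error, the process handling
        # traffic stops instead of carrying on with its old settings.
        raise ConfigTooLarge(
            f"{len(features)} entries exceeds limit of {MAX_FEATURES}"
        )
    return features


if __name__ == "__main__":
    normal = [f"feature_{i}" for i in range(60)]
    oversized = [f"feature_{i}" for i in range(240)]  # file grew past the limit
    print(len(load_feature_file(normal)))  # 60
    try:
        load_feature_file(oversized)
    except ConfigTooLarge as err:
        print("load failed:", err)  # load failed: 240 entries exceeds limit of 200
```

The check itself is reasonable; the danger comes from what happens when it trips in software that sits in front of every request.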

The Cascading Effect

When the oversized configuration file was loaded, it crashed the core proxy software that handles traffic for many Cloudflare services. This wasn't an isolated component failure: because the bot management system sits in the critical request path, its malfunction rippled throughout the network.

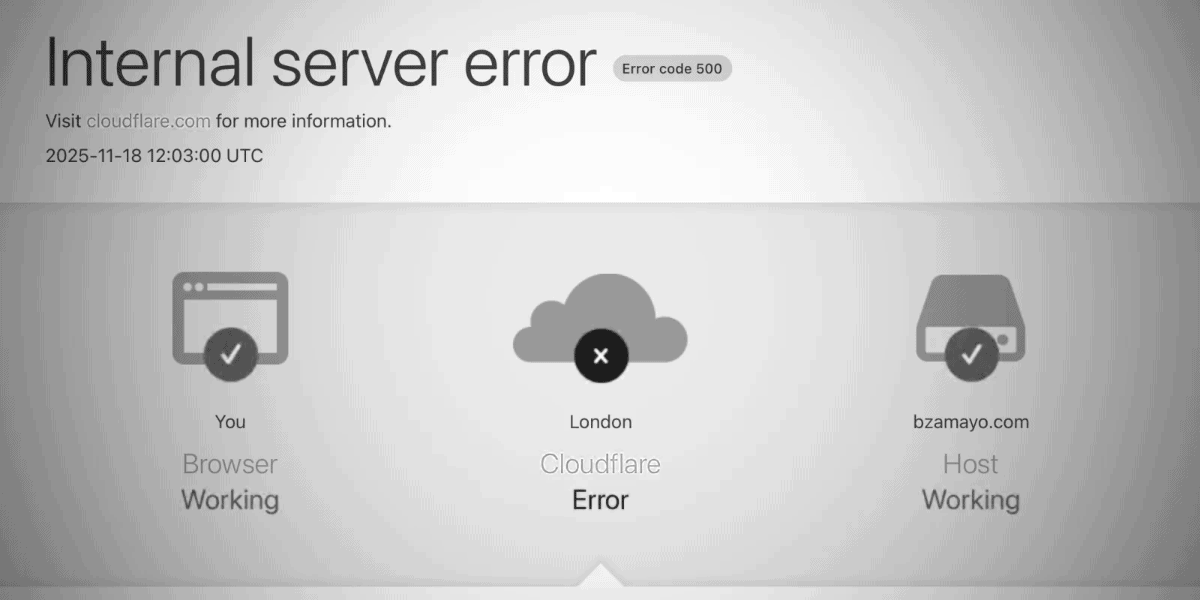

People trying to access websites and applications protected by Cloudflare saw HTTP 500 errors.

Resolution and Remediation Steps

Once Cloudflare's engineers pinpointed the configuration file size issue within the bot management module, they rolled back immediately. By reverting to a previous version of the bot detection configuration, one that stayed within acceptable size limits, they were able to restore basic functionality.

Since the incident, Cloudflare has said it is changing its code so that a similar problem won't cause such a catastrophic failure in the future.
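Cloudflare's post describes its own fixes. The sketch below is only one common defensive pattern, not Cloudflare's implementation: validate a new configuration before using it, and keep serving with the last known-good version if validation fails. This is essentially the manual rollback described above, done automatically. The limit and the names `MAX_FEATURES`, `FeatureConfig`, and `try_update` are hypothetical.

```python
# Hypothetical sketch of "fail safe" configuration reloading: a bad update
# is rejected and the previous, known-good configuration stays in service.
MAX_FEATURES = 200  # assumed limit, for illustration only


class FeatureConfig:
    def __init__(self, initial: list[str]) -> None:
        self.active = list(initial)  # last known-good configuration

    def try_update(self, candidate: list[str]) -> bool:
        """Swap in the candidate only if it passes validation.

        Returns True if the update was applied, False if it was rejected.
        """
        if not candidate or len(candidate) > MAX_FEATURES:
            # Reject the update instead of crashing; a production system
            # would likely also log this and alert an operator.
            return False
        self.active = list(candidate)
        return True


if __name__ == "__main__":
    config = FeatureConfig([f"feature_{i}" for i in range(60)])
    print(config.try_update([f"feature_{i}" for i in range(250)]))  # False
    print(len(config.active))  # 60: the previous version is still in use
    print(config.try_update([f"feature_{i}" for i in range(70)]))  # True
    print(len(config.active))  # 70
```

The trade-off is that a rejected update leaves slightly stale settings in place, which is usually far better than taking traffic offline.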

Transparency

After the incident, Cloudflare published a detailed blog post on its website documenting the issues encountered and how it handled the events throughout the morning.

Jones and Jones Advertising’s Continued Partnership with Cloudflare

Jones and Jones Advertising remains confident in Cloudflare for our DNS and CDN services, as well as for protecting our sites from bad bots. Incidents like this can happen on just about any platform. How a company responds is what defines it, and I think Cloudflare did a great job.